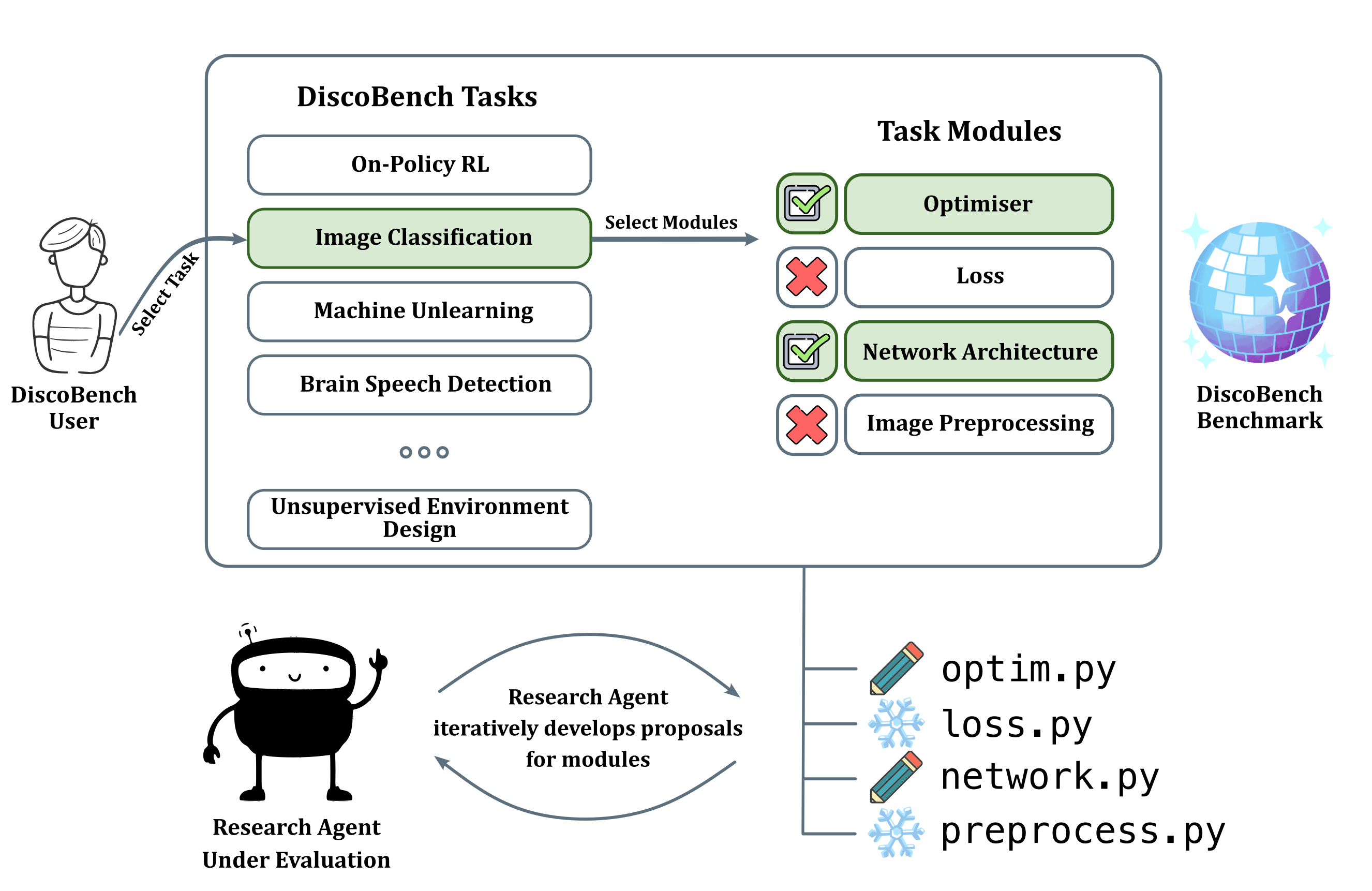

- December 2025: We have released DiscBench, a novel open-ended benchmark for algorithm discovery.

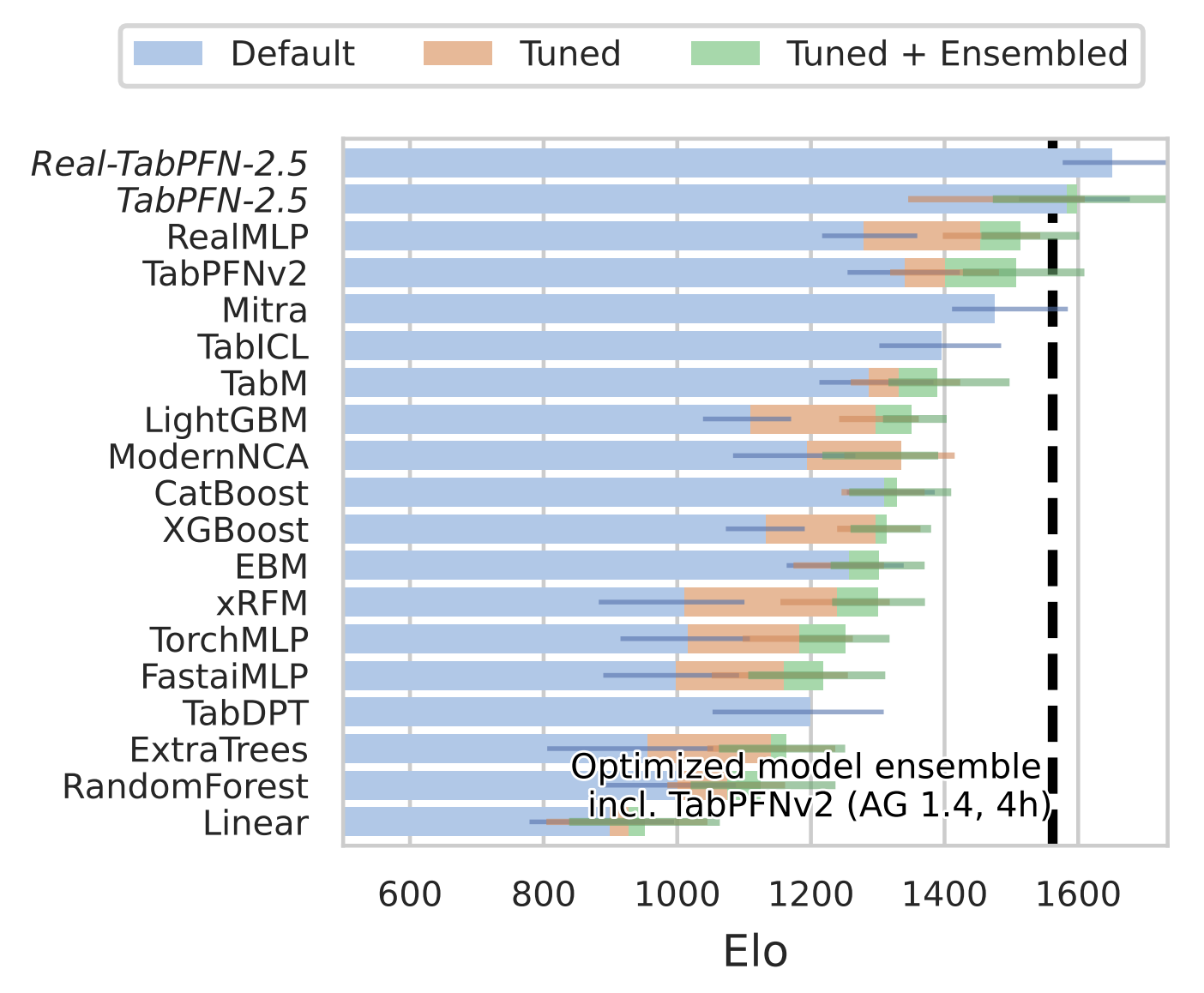

- November 2025: The TabPFN-2.5 model report is out on arXiv! It also received a spotlight at AITD@EurIPS 2025.

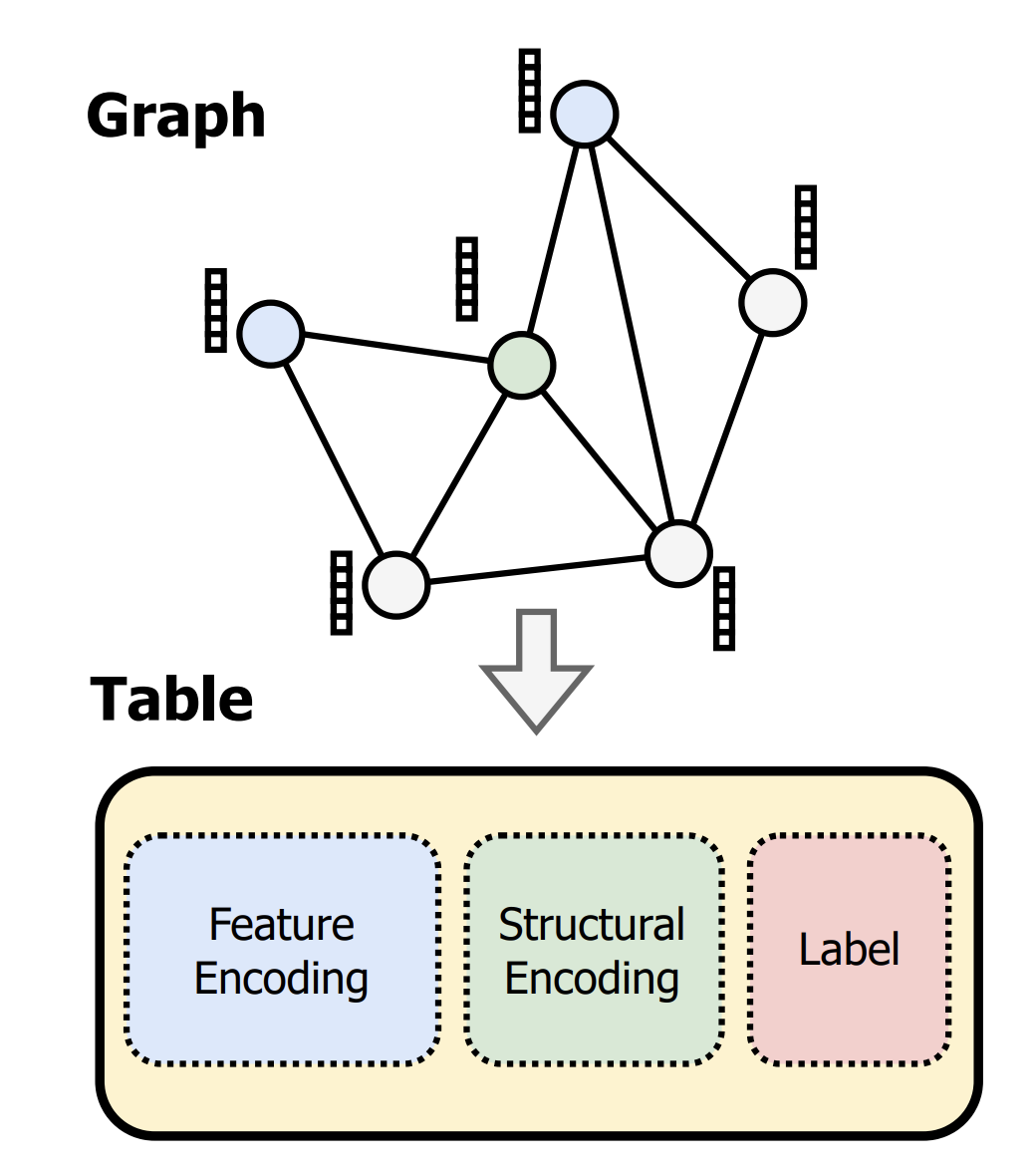

- September 2025: Our paper ‘Bringing Graphs to the Table: Zero-shot Node Classification via Tabular Foundation Models’ has been accepted as an Oral presentation at NPGML@NeurIPS 2025!

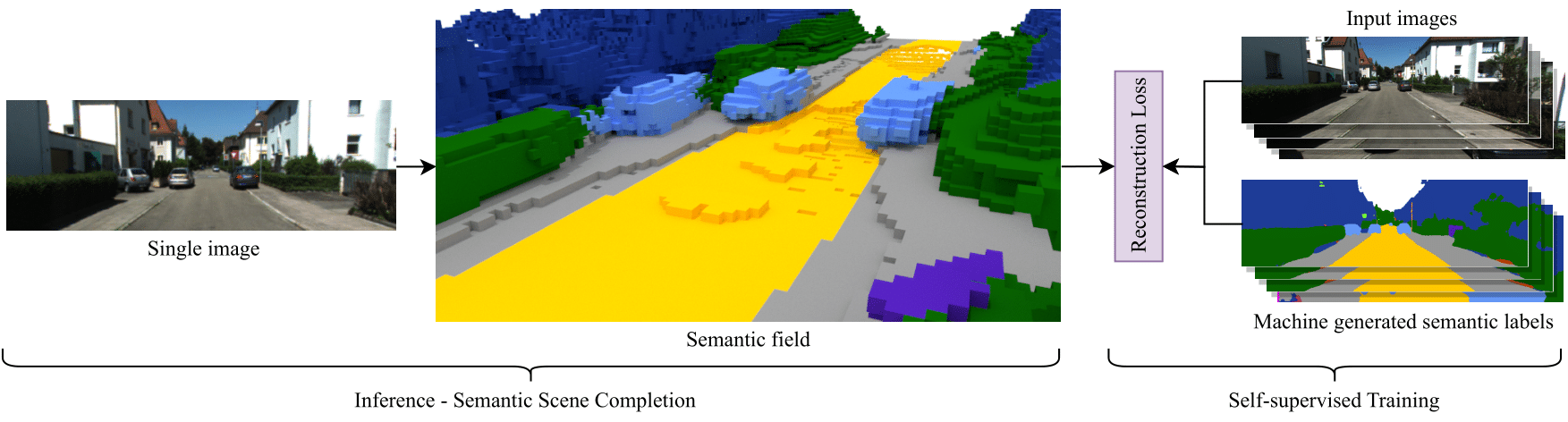

- January 2024: S4C has been selected as a spotlight paper at 3DV 2024!

- November 2023: We have released the code for S4C! We also include the predictions of other state-of-the-art methods on the SSCBench KITTI-360 dataset.

- October 2023: Happy to announce that our paper ‘S4C: Self-Supervised Semantic Scene Completion with Neural Fields’ has been accepted at 3DV 2024!

Hello, I'm Adrian Hayler

I am a Research Scientist at Prior Labs working on Tabular Foundation Models.

Before that, I completed an MSc in Advanced Computer Science at the University of Oxford and a BSc in Mathematics at the Technical University of Munich (TUM). I was fortunate to conduct research under some amazing mentors, namely Daniel Cremers, Jakob Foerster, Michael Bronstein, Christian Rupprecht and Ismail Ilkan Ceylan. Previously, I worked or interned at QuantCo and Jane Street.